AI Solutions

The Hailo-8™ edge AI processor, featuring up to 26 tera-operations per second (TOPS), significantly outperforms all other edge processors. Its area and power efficiency exceed those of other leading solutions by an order of magnitude, at a size smaller than a penny even including the required memory. With an architecture that exploits the core properties of neural networks, our chip allows edge devices to run full-scale deep learning applications more efficiently, effectively, and sustainably than other AI chips and solutions, while significantly lowering costs.

About Hailo

Hailo is a leading developer of top-performing artificial intelligence (AI) processors for edge devices. Its unique technologies enable AI processors that are compact, extremely energy efficient, and powerful enough to process and interpret vast amounts of data in real time.

Hailo AI processors are designed for a wide range of smart devices, with applications in automotive, smart cities, smart retail, Industry 4.0, and many other fields.

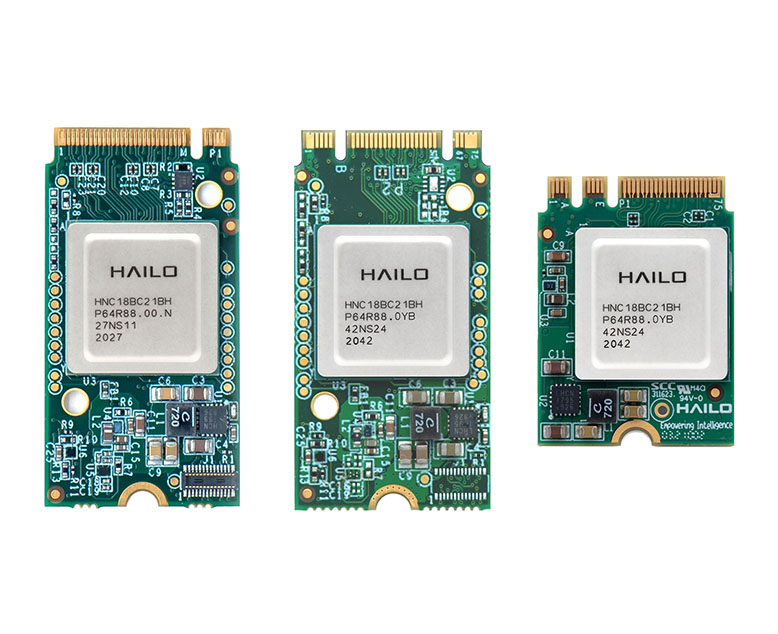

Hailo-8™ M.2 AI Acceleration Module

The Hailo-8™ M.2 Module is an AI accelerator module compatible with the NGFF M.2 form factor in M, B+M, and A+E keys. It is based on the 26-TOPS Hailo-8™ AI processor and offers high power efficiency. The module features a full PCIe Gen-3.0 2-lane interface (4 lanes in the M-key module), delivering unprecedented AI performance for edge devices. It can be plugged into an existing edge device with an M.2 socket to run real-time, low-power deep neural network inference for a broad range of market segments. Leveraging Hailo’s comprehensive Dataflow Compiler and its support for standard AI frameworks, customers can easily port their neural network models to the Hailo-8 and bring high-performance AI products to market quickly.

Please contact us for more information.

Features

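| Form Factor | NGFF M.2 Key M | NGFF M.2 Key B+M | NGFF M.2 Key A+E |
|---|---|---|---|
| AI Processor | Hailo-8™ AI processor with up to 26 TOPS and best-in-class power efficiency | Hailo-8™ AI processor with up to 26 TOPS and best-in-class power efficiency | Hailo-8™ AI processor with up to 26 TOPS and best-in-class power efficiency |
| Dimensions | 22 x 42 mm with breakable extensions to 22 x 60 and 22 x 80 mm | 22 x 42 mm with breakable extensions to 22 x 60 and 22 x 80 mm | 22 x 30 mm |
| Interface | PCIe Gen-3.0, 4 lanes (up to 32 Gbps) | PCIe Gen-3.0, 2 lanes (up to 16 Gbps) | PCIe Gen-3.0, 2 lanes (up to 16 Gbps) |
| Supported Frameworks | TensorFlow and ONNX | TensorFlow and ONNX | TensorFlow and ONNX |
| Supported OS | Linux, Windows (coming soon) | Linux, Windows (coming soon) | Linux, Windows (coming soon) |
| Certification | CE, FCC | CE, FCC | CE, FCC |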
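The bandwidth figures in the Interface row can be checked with a short calculation. The sketch below assumes the standard PCIe Gen 3.0 signaling rate of 8 GT/s per lane and 128b/130b line encoding (values from the PCIe specification, not from this page). Under those assumptions, the 32 Gbps and 16 Gbps figures appear to be raw per-direction rates, leaving roughly 31.5 and 15.75 Gbps after encoding and before packet-level protocol overhead. The function name `pcie_gen3_bandwidth_gbps` is hypothetical.

```python
# Sketch: PCIe Gen 3.0 link bandwidth per direction.
# Assumption: 8 GT/s per lane with 128b/130b line encoding (PCIe Gen 3.0 spec values).
# Packet-level overhead (TLP headers, DLLPs, flow control) is deliberately ignored.

GEN3_GT_PER_S = 8.0          # transfers per second per lane, in GT/s (1 bit per transfer)
GEN3_ENCODING = 128 / 130    # fraction of transferred bits that carry payload data


def pcie_gen3_bandwidth_gbps(lanes: int) -> tuple[float, float]:
    """Return (raw, after-encoding) bandwidth in Gbit/s per direction."""
    if lanes not in (1, 2, 4, 8, 16):  # common link widths
        raise ValueError(f"unsupported lane count: {lanes}")
    raw = GEN3_GT_PER_S * lanes
    return raw, raw * GEN3_ENCODING


if __name__ == "__main__":
    # Lane counts taken from the Interface row of the table above.
    for key, lanes in (("Key M", 4), ("Key B+M / A+E", 2)):
        raw, effective = pcie_gen3_bandwidth_gbps(lanes)
        print(f"{key}: x{lanes} -> {raw:.0f} Gbps raw, {effective:.2f} Gbps after encoding")
```

Running the script prints the raw and post-encoding rate for each key. Sustained throughput to the accelerator will be somewhat lower once transaction-layer framing and flow control are taken into account.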

Hailo-8™ Mini PCIe AI Acceleration Module

The Hailo-8™ Mini PCIe Module is an AI accelerator module compatible with the PCI Express Mini (mPCIe) form factor. It delivers industry-leading AI performance for edge devices, up to 13 tera-operations per second (TOPS), with best-in-class power efficiency. The module can be plugged into an existing edge device with an mPCIe Full-Mini socket to run real-time, low-power deep neural network inference for a broad range of market segments. Leveraging Hailo’s comprehensive Dataflow Compiler and its support for standard AI frameworks, customers can easily port their neural network models to the Hailo-8 and bring high-performance AI products to market quickly.

Features

- Performance: up to 13 TOPS

- Best-in-class power efficiency

- Supported Frameworks: TensorFlow and ONNX

- Form Factor: PCI Express Mini (mPCIe)

- Dimensions: 30 x 50.95 mm

- Interface: PCIe Gen-3.0, 1 lane

- Supported OS: Linux, Windows (coming soon)

- Certification: CE, FCC


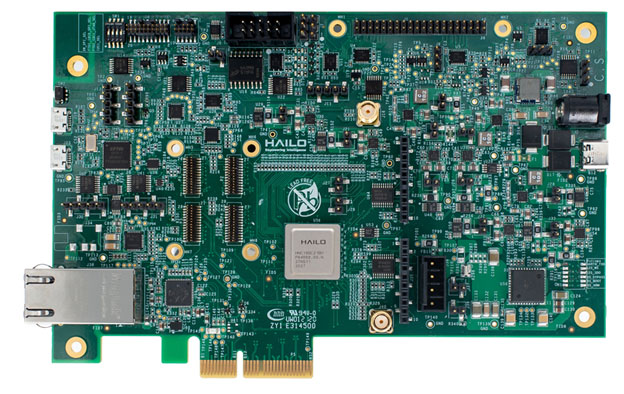

Hailo-8™ Evaluation Board

The Hailo-8 Evaluation Board (EVB) provides a proven design to evaluate the Hailo-8 AI processor. The EVB allows easy access to all peripherals necessary for development, testing and debugging.

Features

- Demonstration of the capabilities and KPIs of the Hailo-8 edge AI processor

- Fast evaluation and prototyping using out-of-the-box deep learning examples

- Accelerated development with the Hailo Dataflow Compiler

- Bit-exact software emulator

- Profiling resource utilization and power consumption of target networks

- Easy deployment of proprietary deep learning algorithms

- Seamless integration with popular software frameworks

- Industry-standard neural network examples typically used in AI hardware acceleration benchmarks

- Easy connection to customers’ existing platforms for a quick proof of concept
